AI’s Impact on Debugging: From Manual Root Cause Analysis to Intelligent Assistants

Introduction: Debugging in the Age of Cloud Complexity

Debugging has always been one of the hardest parts of software engineering. In the cloud era, that difficulty has multiplied. Distributed architectures, async workloads, and transient network failures mean engineers often spend more time finding the root cause than fixing it.

Traditionally, debugging relied on human expertise: someone staring at WinDbg output, grepping logs, or manually correlating App Insights traces. Today, AI is becoming a powerful assistant in this space—reducing toil, accelerating analysis, and even predicting issues before they become outages.

Crash Dump Analysis with AI

Traditional Approach

High-CPU or hang scenarios usually demand WinDbg analysis:

0:000> !threads

0:000> ~*e !clrstack

0:000> !syncblk

Engineers then inspect hundreds of threads to identify the “hot path” or deadlock.

AI-Augmented Insight

AI models (like GPT-5 tuned for diagnostics) don’t just parse dumps—they reason over them:

- Thread Signature Clustering: Groups hundreds of stacks by similarity (e.g., 200 threads in SqlClient.TdsParserStateObject.ReadSni).

- Blockage Graphs: Identifies dependency chains (lock A → lock B → blocked worker).

- Knowledge Retrieval: Matches patterns against known incident KBs (e.g., ThreadPool starvation from sync-over-async EF calls).

- Natural-Language Summaries: Explains cryptic frames in plain English: “Threads are waiting on network IO while holding ThreadPool workers, blocking new requests.”
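As a rough illustration of thread signature clustering, the sketch below groups stacks by their top few frames and reports the largest groups first. It assumes the stacks have already been extracted from the dump into plain lists of frame names; the `stacks` data, the function names, and the `depth` parameter are hypothetical and not part of any real tool.

```python
from collections import Counter


def stack_signature(frames, depth=3):
    """Use the top `depth` frames (innermost first) as the stack's signature."""
    return tuple(frames[:depth])


def cluster_stacks(stacks, depth=3):
    """Count threads per signature and return clusters, largest first."""
    counts = Counter(stack_signature(frames, depth) for frames in stacks.values())
    return counts.most_common()


# Hypothetical input: thread id -> managed frames, innermost frame first.
stacks = {
    1: ["TdsParserStateObject.ReadSni", "TdsParser.Run", "SqlCommand.ExecuteReader"],
    2: ["TdsParserStateObject.ReadSni", "TdsParser.Run", "SqlCommand.ExecuteReader"],
    3: ["Monitor.Enter", "Cache.Get", "OrdersController.Index"],
}

clusters = cluster_stacks(stacks)
for signature, count in clusters:
    print(count, " <- ".join(signature))
```

Exact matching on a fixed number of frames is the simplest possible grouping; a real assistant would likely use fuzzier similarity, but the idea of collapsing hundreds of threads into a handful of signatures is the same.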

Log and Trace Analysis

Traditional Approach

Engineers query logs with Kusto:

AppRequests
| where ResultCode == "500"
| join kind=inner (AppExceptions) on OperationId
| summarize count() by OuterMessage, bin(TimeGenerated, 5m)

AI-Augmented Insight

AI applies temporal and causal inference:

- Sequence Alignment: Compares failing traces to healthy traces, highlighting divergence (similar to DNA alignment).

- Root Cause Attribution: Ranks which event has the highest failure correlation (e.g., “TLS handshake latency >10s occurs in 90% of failed calls”).

- Multi-Layer Correlation: Connects App Insights failures with App Service SNAT exhaustion metrics, something humans often miss.

- Azure Monitor + AI plugins: Surface anomalies with natural-language explanations.
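The root cause attribution idea can be sketched as a simple ranking: for each event, compare how often it appears in failed traces versus healthy ones. This is a minimal sketch under the assumption that traces have already been reduced to lists of event names; the event names, the `traces` data, and `rank_events` are hypothetical, and a high score is only a correlation that still needs validation.

```python
def rank_events(traces):
    """Rank events by (share of failed traces) minus (share of healthy traces).

    traces: list of (events, ok) where ok is True for a healthy trace.
    Returns (event, failed_share, healthy_share) tuples, most suspicious first.
    """
    failed = [set(events) for events, ok in traces if not ok]
    healthy = [set(events) for events, ok in traces if ok]
    all_events = set().union(*failed, *healthy)

    ranking = []
    for event in all_events:
        failed_share = sum(event in t for t in failed) / max(len(failed), 1)
        healthy_share = sum(event in t for t in healthy) / max(len(healthy), 1)
        ranking.append((event, failed_share, healthy_share))

    # Largest gap between failed and healthy share first; name breaks ties.
    ranking.sort(key=lambda r: (r[2] - r[1], r[0]))
    return ranking


# Hypothetical traces: (event names seen in the trace, request succeeded?)
traces = [
    (["dns_lookup", "tls_handshake_slow", "sql_query"], False),
    (["dns_lookup", "tls_handshake_slow"], False),
    (["dns_lookup", "cache_miss", "sql_query"], False),
    (["dns_lookup", "sql_query"], True),
    (["dns_lookup", "cache_miss", "sql_query"], True),
]

ranking = rank_events(traces)
for event, failed_share, healthy_share in ranking:
    print(f"{event}: failed={failed_share:.0%} healthy={healthy_share:.0%}")
```

Events that appear in every trace, such as `dns_lookup` here, score near zero, which is why the comparison against healthy traces matters more than the raw failure percentage.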

Azure PaaS Case Studies with AI

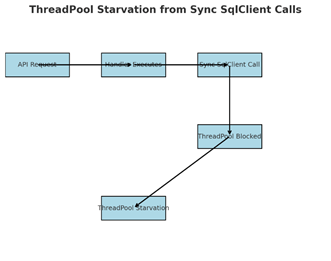

Case A: Thread Pool Starvation

- Symptom: 30s stalls, CPU spikes, API timeouts.

- Dump Evidence:

Thread 74:

System.Data.SqlClient.SqlCommand.ExecuteReader()

- AI Insight:

- Clusters blocked workers.

- Detects sync-over-async EF pattern.

- Cross-checks App Insights timings.

- Generates RCA:

“ThreadPool starvation amplified by synchronous SqlClient calls. This matches the known sync-over-async pattern in EF data access.”

Figure 1: ThreadPool Starvation Flow

AI surfaces not just threads, but the design flaw pattern.

(AppLens detectors for CPU + thread analysis are evolving in this direction.)

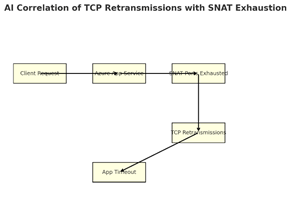

Case B: Intermittent Network Failures

- Symptom: Sporadic disconnects.

- Wireshark Evidence:

[TCP Retransmission] Seq=238 Ack=455

- AI Insight:

- Detects retransmission bursts.

- Maps IPs to subnets → zones.

- Correlates with DNS ENOTFOUND spikes.

- Hypothesis: SNAT port reuse under aggressive scale-out churn.

Figure 2: AI Correlation of TCP Retransmissions with SNAT Exhaustion

AI “connects the dots” across packet capture + DNS + App Service metrics.

(Azure AppLens networking detectors already do correlation across Front Door, App Service, and DNS logs.)
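To make the burst detection and DNS correlation steps in Case B concrete, here is a small sketch that buckets retransmission timestamps into fixed windows and then checks which bursts overlap DNS failures. It assumes timestamps (in seconds) have already been exported from the capture and from the application logs; all data, function names, and thresholds are hypothetical.

```python
from collections import Counter


def find_bursts(timestamps, window_s=1.0, threshold=3):
    """Return start times of fixed windows with at least `threshold` events."""
    buckets = Counter(int(ts // window_s) for ts in timestamps)
    return sorted(b * window_s for b, n in buckets.items() if n >= threshold)


def bursts_with_dns_errors(bursts, dns_error_times, window_s=1.0):
    """Keep only the bursts whose window also contains a DNS failure."""
    return [
        b for b in bursts
        if any(b <= t < b + window_s for t in dns_error_times)
    ]


# Hypothetical timestamps in seconds since the start of the capture.
retransmit_times = [0.2, 5.1, 5.3, 5.4, 5.9, 12.0, 20.1, 20.2, 20.8]
dns_error_times = [5.5, 9.0]

bursts = find_bursts(retransmit_times)
correlated = bursts_with_dns_errors(bursts, dns_error_times)
print(bursts)
print(correlated)
```

Fixed windows can split a burst that straddles a boundary, so a sliding window would be a reasonable refinement once the basic correlation looks promising.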

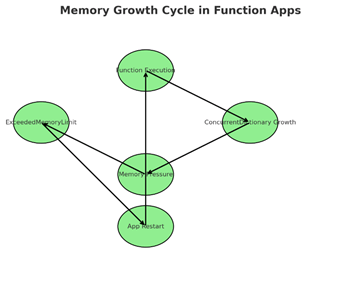

Case C: Memory Growth in Function Apps

- Symptom: App restarts with ExceededMemoryLimit.

- Heap Evidence:

!dumpheap -stat

ConcurrentDictionary+Node<…> 5898777 377 MB

- AI Insight:

- Detects dictionary growth pattern.

- Cross-checks against known OpenTelemetry memory leak issues.

- Suggests fix: upgrade OTel SDK or refactor locking.

Figure 3: Memory Growth Cycle in Function Apps

AI identifies cause → effect → mitigation.

(App Service Memory Leak Detector + AI annotation is a logical future capability.)
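One way to picture the growth detection in Case C is a diff between two heap snapshots, ranking types by how many bytes they gained. The sketch assumes the `!dumpheap -stat` output from two dumps has already been parsed into dictionaries of type name to total bytes; the numbers below are invented for illustration, apart from the roughly 377 MB dictionary node total quoted above.

```python
def rank_growth(before, after):
    """Rank types by byte growth between two heap snapshots, largest first.

    before/after: dict of type name -> total bytes on the managed heap.
    """
    growth = {name: size - before.get(name, 0) for name, size in after.items()}
    return sorted(growth.items(), key=lambda item: item[1], reverse=True)


# Hypothetical snapshots taken some time apart (type name -> total bytes).
snapshot_1 = {
    "System.String": 40_000_000,
    "ConcurrentDictionary+Node": 90_000_000,
    "System.Byte[]": 25_000_000,
}
snapshot_2 = {
    "System.String": 42_000_000,
    "ConcurrentDictionary+Node": 377_000_000,
    "System.Byte[]": 24_000_000,
}

top = rank_growth(snapshot_1, snapshot_2)
for name, delta in top:
    print(f"{name}: {delta / 1_000_000:+.0f} MB")
```

A single large type is not proof of a leak, but a type that keeps growing across snapshots while others stay flat is a strong lead for the knowledge-retrieval step.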

How AI Thinks Like a Debugger

AI debugging models operate in layers:

- Pattern Recognition – Recognize common dump/log signatures (like antivirus heuristics).

- Causal Graph Inference – Link telemetry events in time (“SNAT exhaustion → retransmits → timeouts”).

- Knowledge Retrieval – Pull solutions from prior tickets, GitHub issues, or KBs.

- Recommendation Generation – Suggest mitigations (restart, refactor, upgrade).
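The causal graph inference layer can be approximated, very loosely, by ordering telemetry events in time and chaining those that follow each other closely. The sketch below is an assumption-laden simplification: `link_events`, the 30-second gap, and the sample events are hypothetical, and temporal order only suggests a candidate chain rather than proving causation.

```python
def link_events(events, max_gap_s=30):
    """Chain events that follow the previous one within `max_gap_s` seconds.

    events: list of (timestamp_s, name), in any order.
    Returns one "a -> b -> c" string per chain, in time order.
    """
    chains, current = [], []
    for ts, name in sorted(events):
        if current and ts - current[-1][0] > max_gap_s:
            chains.append(current)
            current = []
        current.append((ts, name))
    if current:
        chains.append(current)
    return [" -> ".join(name for _, name in chain) for chain in chains]


# Hypothetical telemetry events: (seconds since start, event name).
events = [
    (130, "timeouts"),
    (100, "SNAT exhaustion"),
    (112, "retransmits"),
    (900, "deploy"),
]

chains = link_events(events)
for chain in chains:
    print(chain)
```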

GitHub Copilot can generate WinDbg command bundles or Kusto queries on the fly.

Risks and Limitations

- Hallucinations: AI may suggest fixes that don’t apply.

- Data Sensitivity: Dumps and logs can contain secrets (for example, values retrieved from Key Vault) and PII, so ingesting them into an AI service requires governance.

- Overconfidence: Correlation ≠ causation; engineers must validate hypotheses.

The Future of Debugging

- Predictive Debugging: AI detects rising GC heap usage → forecasts OOM in 20 mins.

- Self-Healing: AI auto-recycles a leaking worker if memory signature matches a known bug.

- Cross-Layer Correlation: End-to-end flow: Azure Front Door → App Service → SQL → Storage → downstream APIs. AI identifies the weak link.

- Engineer Enablement: Instead of memorizing WinDbg arcana, new engineers onboard via AI-generated RCAs with evidence citations.

- App Service Auto-Heal could evolve into AI-Heal, dynamically tuning configs.

- Anomaly detection in Azure Monitor (for example, dynamic thresholds on metric alerts) already points toward predictive debugging.

- Copilot in Azure Portal may soon surface AI-driven insights inline.
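The predictive debugging idea above can be illustrated with a plain least-squares trend line over heap samples, extrapolated to a memory limit. This is a minimal sketch, assuming heap size is sampled at known times and grows roughly linearly; the sample values, the limit, and `forecast_seconds_to_limit` are hypothetical, and a production forecaster would need to handle GC sawtooth patterns and noise.

```python
def forecast_seconds_to_limit(samples, limit_mb):
    """Estimate seconds from the last sample until heap usage hits `limit_mb`.

    samples: list of (time_s, heap_mb). Fits a least-squares line and
    returns None when there are too few samples or no upward trend.
    """
    n = len(samples)
    if n < 2:
        return None
    mean_t = sum(t for t, _ in samples) / n
    mean_m = sum(m for _, m in samples) / n
    var_t = sum((t - mean_t) ** 2 for t, _ in samples)
    if var_t == 0:
        return None
    slope = sum((t - mean_t) * (m - mean_m) for t, m in samples) / var_t
    if slope <= 0:
        return None  # Flat or shrinking heap: nothing to forecast.
    intercept = mean_m - slope * mean_t
    crossing_time = (limit_mb - intercept) / slope
    return max(crossing_time - samples[-1][0], 0.0)


# Hypothetical samples: heap grows 60 MB per minute from 500 MB.
samples = [(0, 500), (60, 560), (120, 620), (180, 680)]
eta = forecast_seconds_to_limit(samples, limit_mb=1880)
print(f"Estimated time to limit: {eta / 60:.0f} minutes")
```

With these made-up numbers the trend reaches the limit 20 minutes after the last sample, which is the kind of lead time that makes a proactive recycle or scale-out possible.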

Conclusion

Debugging is evolving from manual forensic science to AI-assisted causal analysis.

- Then: Dumps, logs, Wireshark.

- Now: AI clusters, correlates, and hypothesizes.

- Future: AI predicts and self-heals.

The engineer’s role is changing—less time firefighting, more time designing resilient architectures. Those who adopt AI debugging workflows today will shape the future of reliability engineering.

Final Note: AI does not replace engineering expertise; it amplifies it. The most powerful outcomes emerge when AI provides fast hypotheses, ranked evidence, and cross-layer correlations, and human engineers validate, contextualize, and implement durable fixes. Debugging is no longer just about finding what broke—it’s about accelerating resilience through human + AI collaboration.